You know window cleaning is easy, but you must be a pro at certain altitudes.

As the adage says, never roll your own crypto. And if you have the wisdom of an old Chinese programmer, you know it’s true.

This post opens by discussing how hard it is to get encryption right in software and the lessons from past mistakes. Then, it discusses the challenges of using encryption in backend applications, from key management and ciphertext tampering to searching encrypted data. While encryption of data in transit and encryption of data at rest don’t use the same cryptographic schemes, we can still learn from both to eventually create a robust solution and keep cryptographic failures at bay.

Robust encryption libraries are still a struggle

Encryption encompasses and conceals (pun intended) a multitude of intricacies that could expose vulnerabilities for attackers to exploit and compromise the encrypted data. These vulnerabilities often stem from the nuances of implementing encryption algorithms in code rather than from mathematical breakthroughs or algorithmic weaknesses.

Even when using encryption libraries, checking their popularity and whether they have known implementation issues is essential. Selecting the appropriate encryption algorithm for your use case is important, as is considering how your performance requirements may impact your encryption strategy while maintaining its robustness.

To illustrate, consider the history of bugs in TLS, which carries the security of the internet and all HTTPS websites. It's one of the most used protocols. Achieving the level of security it has today took substantial time and expertise, even for the foremost experts in the field.

The challenge lies in more than just adopting cryptographic solutions and putting them into practice. It can be a complex endeavor, and in the following sections, we'll delve into examples that you should familiarize yourself with to prevent potential pitfalls.

Common pitfalls of data encryption that lead to sensitive data exposure

1. Employing an inappropriate block cipher mode

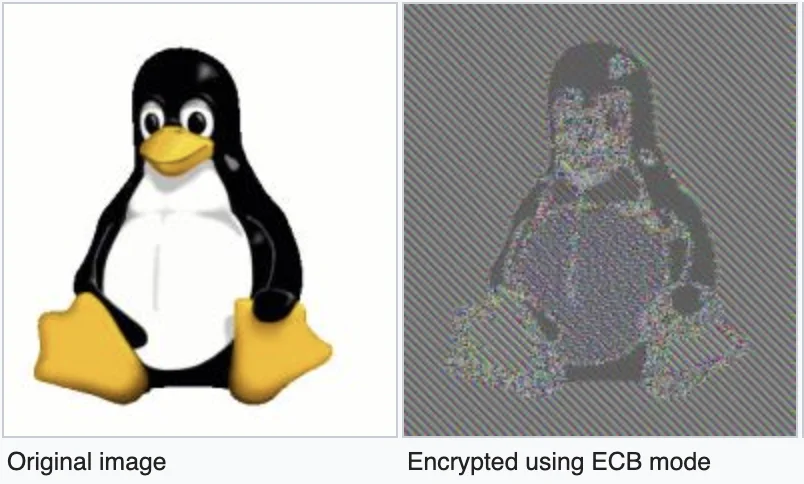

The realm of encryption boasts a multitude of algorithms, and selecting one without a solid understanding can jeopardize the security of your data. Take, for instance, the infamous blunder by Zoom when they attempted to implement their own encryption, inadvertently introducing numerous vulnerabilities. In that case, they used the weak ECB block cipher mode for their network protocol. ECB encrypts every block independently, so identical plaintext blocks produce identical ciphertext blocks, and patterns in the data survive encryption. More robust modes, such as CBC, chain blocks together and add more ‘noise’.

In this picture, you can see what’s wrong with ECB.

How often do you look at the encrypted data bytes in hex and see a pattern?
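To make the pattern problem concrete, here is a minimal sketch that encrypts three identical plaintext blocks in ECB and in CBC mode. It assumes a toy block cipher (a four-round Feistel network built on HMAC-SHA256) as a stand-in for AES, only so the example runs on the Python standard library. The function names are hypothetical, and the toy cipher must never be used for real data.

```python
import hashlib
import hmac
import os

BLOCK = 16  # block size in bytes, the same as AES


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Round function: keyed hash of one half, truncated to half a block.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[: BLOCK // 2]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    # Four-round Feistel network. A TOY stand-in for AES, for illustration only.
    left, right = block[: BLOCK // 2], block[BLOCK // 2:]
    for i in range(4):
        left, right = right, bytes(a ^ b for a, b in zip(left, _round(key, i, right)))
    return left + right


def split_blocks(data: bytes) -> list:
    if len(data) % BLOCK:
        raise ValueError("input must be a multiple of the block size")
    return [data[i: i + BLOCK] for i in range(0, len(data), BLOCK)]


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    # ECB: every block is encrypted independently of the others.
    return b"".join(toy_encrypt_block(key, b) for b in split_blocks(plaintext))


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    # CBC: each block is XORed with the previous ciphertext block first.
    out, prev = [], iv
    for b in split_blocks(plaintext):
        prev = toy_encrypt_block(key, bytes(x ^ y for x, y in zip(b, prev)))
        out.append(prev)
    return b"".join(out)


key = os.urandom(32)
plaintext = b"ATTACK AT DAWN!!" * 3  # three identical 16-byte blocks

ecb_blocks = split_blocks(ecb_encrypt(key, plaintext))
cbc_blocks = split_blocks(cbc_encrypt(key, os.urandom(BLOCK), plaintext))

print("distinct ECB blocks:", len(set(ecb_blocks)))
print("distinct CBC blocks:", len(set(cbc_blocks)))
```

In ECB, the three ciphertext blocks are identical, which is exactly the kind of pattern visible in the picture. In CBC, the random IV and the chaining make them differ.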

2. On nonces and non-sense

Employing encryption might give you the illusion of data protection, but genuine assurance requires additional validation steps, such as adding a separate verification step or enlisting a certified cryptography professional to scrutinize your code. It's surprisingly easy to make mistakes without noticing.

For instance, imagine the inadvertent use of a fixed nonce for certain payloads. A nonce is a number that must be used only once with a given key; it keeps repeated messages from producing related ciphertexts and helps prevent replay attacks. This seemingly minor error, a fixed nonce, can significantly weaken your encryption without you being aware of any issues. An example of such a case was the ChaCha20-Poly1305 long-nonce vulnerability in OpenSSL, potentially exposing data.

So, use a cryptographically secure PRNG (pseudo-random number generator) for your nonces, and never reuse a nonce with the same key.

And the non-sense? In cryptography, everything must make sense. It’s really “the devil is in the details.”
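Why a fixed nonce is so damaging can be sketched in a few lines. The example below assumes a toy stream cipher (a keystream derived from HMAC-SHA256 in counter mode) as a stand-in for a real cipher such as ChaCha20; the helper names are hypothetical. With the same key and nonce, the keystream repeats, so XORing two ciphertexts cancels it out.

```python
import hashlib
import hmac
import secrets


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # TOY keystream: HMAC-SHA256 over nonce || counter. Illustration only.
    out, counter = b"", 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return out[:length]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def toy_encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    return xor(plaintext, keystream(key, nonce, len(plaintext)))


key = secrets.token_bytes(32)
m1 = b"transfer $100 to alice"
m2 = b"transfer $999 to mallo"

# The mistake: the same nonce for two messages under the same key.
fixed_nonce = bytes(12)
c1 = toy_encrypt(key, fixed_nonce, m1)
c2 = toy_encrypt(key, fixed_nonce, m2)

# The keystream cancels out, leaking the XOR of the two plaintexts.
leak = xor(c1, c2)
# Anyone who knows (or guesses) m1 now recovers m2 without the key.
recovered_m2 = xor(leak, m1)

# The fix: a fresh nonce from a CSPRNG for every message.
d1 = toy_encrypt(key, secrets.token_bytes(12), m1)
d2 = toy_encrypt(key, secrets.token_bytes(12), m2)
```

Here `recovered_m2` equals `m2` even though the attacker never saw the key, while the XOR of `d1` and `d2` reveals nothing useful.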

3. Padding issues

It's a common oversight, but many people do not realize that properly padding a payload before encrypting or digitally signing it is a critical step in ensuring a secure outcome. Neglecting to pad your input can, in certain algorithms, compromise the strength of the encryption. Conversely, handling padding incorrectly can inadvertently reveal details about the plaintext, opening the door to potential vulnerabilities, such as padding oracle attacks. These attacks are not just theoretical; they had real-world consequences starting in 2010, and even in 2019, they were still around, making them a compelling and relevant subject of study.
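As an illustration, here is a minimal sketch of padding and strict unpadding. It assumes PKCS#7, a widely used scheme for block ciphers; the article does not name a specific scheme, so treat that choice and the function names as assumptions.

```python
def pkcs7_pad(data: bytes, block_size: int = 16) -> bytes:
    # Always add 1..block_size bytes, each holding the padding length.
    n = block_size - len(data) % block_size
    return data + bytes([n]) * n


def pkcs7_unpad(padded: bytes, block_size: int = 16) -> bytes:
    # Validate every padding byte, not only the last one.
    if not padded or len(padded) % block_size:
        raise ValueError("invalid padding")
    n = padded[-1]
    if n < 1 or n > block_size or padded[-n:] != bytes([n]) * n:
        raise ValueError("invalid padding")
    return padded[:-n]


padded = pkcs7_pad(b"abc")
original = pkcs7_unpad(padded)
```

A padding oracle arises when an attacker can tell "invalid padding" apart from other failures, for example through a distinct error message or response time. Verifying an authentication tag before unpadding, or using an authenticated mode that needs no padding, avoids exposing that signal.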

4. Choosing the correct encryption algorithm

Using the same key for encrypting data in certain algorithms might make it possible to break the encryption after collecting a huge amount of data. You must rotate keys and avoid weak cryptographic algorithms.

In the early days of Wi-Fi, networks were encrypted with WEP (Wired Equivalent Privacy), but it wasn’t equivalent at all. In fact, academic researchers managed to break it completely: an attacker can collect enough packets and then analyze them to recover the encryption key using tools such as Aircrack.

5. Splitting keys or developing a multi-party computation

“I just want to make it harder for them to steal our keys,” I can hear one engineer telling another.

Splitting keys is one thing, but eventually, you need to stitch them together and then use them as input for the encryption function. If you fear that the keys will get stolen from the machine that does the encryption in the first place, you might opt for multi-party computation to solve this problem.

But even then, constructing a multi-party computation (MPC) system is not something you should do. While it may appear straightforward, the reality is far from it. Fireblocks researchers found bugs in the MPC implementation used by companies such as Coinbase and Binance.

6. Side-channel timing attacks

And to make it worse, there is a sophisticated, almost mind-blowing class of security threats that exploit subtle variations in the time a system takes to execute specific operations and the information these variations leak. This type of attack observes the normal response times of the software or hardware running the encryption. As such, the leak is a side effect, not a bug in the code per se. However, with the right experience, writing robust code that resists such attacks is surely possible.

Side-channel attack example

Consider a naive password comparison that leaks information when code compares two plain text passwords to let somebody into a system (please ignore secure hashing for now, for clarity). One password comes from the user's input, and the other is in memory. The software uses a normal memcmp function, which makes it unsafe. If an attacker can trigger the password comparison tens of thousands of times, say through an API or over a network protocol, they can brute force the characters one by one. The secret to breaking memcmp is realizing that it stops looping as soon as the characters in the comparison differ. So, when the guess for the next character is correct, it takes slightly longer to return a result, even if the result is a failure.

So, take the password ‘nicecat’. The attacker sends ‘a’ as the password. memcmp returns immediately after comparing only the first character, as the first letter does not match. Then the attacker tries ‘b’, ‘c’, etc. When they reach ‘n’, they see a difference in the average response time of the comparison. It means they hit the right character. Now they try ‘na’, ‘nb’, ‘nc’, etc., until they recover the whole password. Amazingly, it can work with encryption keys, too, in certain situations.
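The leak can be shown without a stopwatch. The sketch below mimics an early-exit comparison and counts how many bytes it inspects before returning; the function names are hypothetical. The count stands in for the response time an attacker would measure. The last function shows the usual remedy in Python, `hmac.compare_digest`, whose running time does not depend on where the inputs differ.

```python
import hmac


def naive_compare_steps(secret: bytes, guess: bytes) -> tuple:
    """Early-exit comparison that also reports how many bytes it compared."""
    steps = 0
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps  # stops at the first mismatch, like memcmp
    return len(secret) == len(guess), steps


def safe_compare(secret: bytes, guess: bytes) -> bool:
    # Designed to take the same time wherever the first mismatch occurs.
    return hmac.compare_digest(secret, guess)


secret = b"nicecat"
for guess in (b"aaaaaaa", b"naaaaaa", b"niaaaaa", b"nicecat"):
    print(guess, naive_compare_steps(secret, guess), safe_compare(secret, guess))
```

The number of inspected bytes grows from 1 to 2 to 3 as more leading characters are guessed correctly, which is the signal the attacker averages out of the response times.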

Data encryption at rest is useless in the cloud

Misunderstanding the value of encryption for data at rest in cloud environments is a big problem. When you need to protect data and not just check the box for security compliance’s sake, data encryption at rest has to be judged differently and therefore understood first. Data encryption at rest doesn’t help protect your customer data because it’s unlikely that anybody will steal the hard drive storing the data from an Amazon data center.

So why do we do it? Because it's old baggage and a part of security standards today.

But, by contrast, encryption of data at rest is of huge value for mobile phones. If stolen, encrypting data at rest makes it incredibly hard to obtain the plaintext data (photos, texts, etc.).

Data encryption at rest vs application-level encryption

Encryption at rest is effectively broken, as it automatically decrypts data for an application's read (query) requests. So, when an attacker manages to get inside your network and connect to the database, this protection mechanism fails as it allows them to read the data. This attack against databases is probably the most common, and that’s unacceptable.

Application-level security addresses this weakness. In this case, the attacker gets encrypted data when they read from the database. However, they can’t decipher it because they don’t have access to the keys held by the application or a vault. If they want access to the keys, they must break into the application, raising the bar.

Security by design for working with the secret keys

Proper key management in the backend system is one of the hardest things to get right. It’s not a topic covered by academia or researchers because it only occurs in real attacks when threat actors penetrate networks. However, here are some issues you should be aware of to build your system securely:

- Cryptography is not solely about encrypting data. Distributing the secret key across various components in the backend for data decryption can lead to key compromise. Any encrypted data becomes vulnerable if one component holding the secret key is compromised.

The best practice is to have a separate key for different use cases to reduce potential exposure if a key is compromised (hence the use of envelope encryption).

- When the need arises to rotate keys, you must also find secure storage for them (i.e. all the previous keys normally remain in use). However, determining where to store the particular key that encrypts a specific payload and how to manage the key used for decryption can introduce complex implementation challenges.

- Storing data encryption keys (DEKs) alongside the data can pose risks in the event of a system attack. Safeguarding these DEKs is vital. Do this by encrypting them with a master key, such as a key encryption key (KEK) from a KMS/HSM.

- It's crucial to recognize why key management systems (KMS) restrict the export of secret keys and use them exclusively for internal encryption operations: so they can never be compromised. C’est tout. Keys should not circulate freely within your systems.

- Sharing keys and secrets for debugging purposes, even on platforms such as Slack, is a sign of a poorly designed system. This practice means you haven’t made it easy for your engineers to diagnose the system or complete their work.
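The key rotation point in the list above raises the question of how a payload finds its key after several rotations. One possible approach, sketched here under assumptions, is to prefix every encrypted blob with the ID of the key that produced it. The `Keyring` class, the 4-byte header, and the function names are all hypothetical; in a real system, the keys would stay inside a KMS or vault, and only the key ID would travel with the data.

```python
import secrets
import struct


class Keyring:
    """Hypothetical in-memory keyring that keeps every key version."""

    def __init__(self) -> None:
        self._keys = {}
        self.current_id = 0
        self.rotate()

    def rotate(self) -> int:
        # Add a new key version; older versions stay for decryption.
        self.current_id += 1
        self._keys[self.current_id] = secrets.token_bytes(32)
        return self.current_id

    def current(self) -> tuple:
        return self.current_id, self._keys[self.current_id]

    def lookup(self, key_id: int) -> bytes:
        return self._keys[key_id]


def add_header(key_id: int, ciphertext: bytes) -> bytes:
    # Blob layout (an assumption): 4-byte big-endian key ID || ciphertext.
    return struct.pack(">I", key_id) + ciphertext


def split_header(blob: bytes) -> tuple:
    (key_id,) = struct.unpack(">I", blob[:4])
    return key_id, blob[4:]


ring = Keyring()
key_id, key = ring.current()
blob = add_header(key_id, b"<ciphertext produced with this key>")

ring.rotate()  # new writes use key 2, old blobs still name key 1
old_id, ciphertext = split_header(blob)
old_key = ring.lookup(old_id)
```

New data is always written with the current key, while the header lets old data keep decrypting until it is re-encrypted.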

Using keys in microservices

When working with cryptography, the application services that access the data must have the secret keys to encrypt and decrypt data. Well, this is wrong.

There’s a question of whether the key remains permanently in memory and can be leaked. But there’s also a question of how the key gets to the service. Is it through an environment variable, a KMS, or a vault?

The best practice is not to have the key at all. The key must be isolated so nobody can compromise it. Using a KMS is a good start, but the problem is that it’s relatively slow and expensive. You can read more about the difference between a vault and a KMS in AWS KMS vs Piiano Vault: Which Encryption Mechanism to Choose for Your PII Data.

Sometimes, companies attempt to separate duties between microservices to reduce risk. For example, one microservice holds the private key for decrypting data, and another holds the public key for encrypting data. This practice complicates their system, and the return on investment is unclear.

Threat modeling and architecture for working with secret keys

Before making drastic decisions on how to design your system, it’s important to consider the following:

- The threat model: What are we afraid of? Who can generally access the application? What kind of sensitive information does it keep or have access to? Confidential data at all? Credit cards, health, etc? How much damage can be done if it's compromised? For example, could the threat shut down the system or delete data?

- The attack surface of the application: Does it have public APIs that anybody can trigger? Is it a server working with some obscure network protocol that can receive malicious packets and payloads? What’s the size of the pre-authentication and post-authentication attack surface? What kind of components are accessible to an outsider attacker to leverage?

- Exploitation Possibility: What is the likelihood of a service being exploited and, therefore, compromised due to remote code execution, memory leak vulnerabilities, or any other security bug? For example, in what languages is it written? C and C++ are known for poor memory management and are more prone to security issues. Is the application internet-facing? Is it fully patched, or has it enabled the latest security mitigations? Is the service sandboxed?

Sometimes, to balance concerns of performance and costs, a more secure approach is having a small dedicated microservice (say, a vault) or a sidecar alongside your container that is solely responsible for encrypting and decrypting data, exposes a minimal API, and never lets the keys out. Think of a KMS on steroids.

This approach minimizes the risk of someone compromising the application and retrieving the keys, because even if they break into the application, the keys are stored elsewhere. However, you might say, “you don’t need the keys to read data because the application has permission. So, the attacker can invoke the decryption API at will”. Here you must distinguish between attacks that enable database read operations and attacks that enable arbitrary application code execution, which are less common. But, eventually, you’re right. You can address this threat with more security controls; more about that in the last section.

Data integrity and anti-tampering

Data encryption is not the same as data signing. Encryption of data doesn't prevent someone from tampering with the ciphertext data.

There’s a known technique where an attacker can manipulate an encrypted data blob and attempt to decipher its content upon decryption, potentially recovering the secret key. It’s vital to have data integrity fully protected in your implementation, especially when data is in transit or at rest and when an attacker could make multiple attempts at leaking information to break the encryption.
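A minimal sketch of tamper detection follows, assuming an encrypt-then-MAC layout in which an HMAC-SHA256 tag is appended to the ciphertext. The function names and the layout are assumptions; authenticated modes such as AES-GCM provide the same guarantee as part of the cipher.

```python
import hashlib
import hmac
import secrets

TAG_LEN = 32  # HMAC-SHA256 output size in bytes


def protect(mac_key: bytes, ciphertext: bytes) -> bytes:
    # Append a tag computed over the ciphertext (encrypt-then-MAC).
    return ciphertext + hmac.new(mac_key, ciphertext, hashlib.sha256).digest()


def verify_and_strip(mac_key: bytes, blob: bytes) -> bytes:
    # Check the tag BEFORE any decryption or padding handling takes place.
    if len(blob) < TAG_LEN:
        raise ValueError("blob too short")
    ciphertext, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext was modified")
    return ciphertext


mac_key = secrets.token_bytes(32)  # separate from the encryption key
blob = protect(mac_key, b"<ciphertext bytes>")

# An attacker flips a single bit in the stored blob.
tampered = bytes([blob[0] ^ 0x01]) + blob[1:]
```

`verify_and_strip(mac_key, blob)` returns the ciphertext, while the tampered blob is rejected before the decryption code ever sees it, which denies the attacker the repeated attempts described above.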

Exploiting weak data access controls for decrypting data

Encryption primarily serves as a means of controlling data access, determining who has the privilege and capability to access specific data. However, it's important to recognize that encryption is just one approach among many. In certain cases, simpler methods, such as tokenization, or just access checks may suffice, depending on the use case.

Furthermore, encrypted data at rest should always be resilient because even if an attacker manages to transport it within your system, they shouldn't gain unauthorized access to the data.

Picture this: you access the underlying data in a system and get to an encrypted data blob. However, you cannot decrypt it because the access control lists (ACLs) stop you. Now, consider feeding this encrypted blob into another part of the system that lets you decrypt ciphertext but doesn’t verify underlying assumptions (like the source of the data). In this scenario, you have circumvented the security of the data by using the system against itself.

To mitigate these risks, encrypted data should be able to identify its source, and the system must rigorously verify its origin. This approach helps prevent cross-permissions attacks, ensuring that data from other components in the system or users remains secure and inaccessible.
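One way to let encrypted data "identify its source" is to bind a context label to each blob and verify it on every decryption request. The sketch below assumes an HMAC-SHA256 tag over the context and the ciphertext; the context format and function names are hypothetical. Authenticated ciphers offer the same idea through their associated data parameter.

```python
import hashlib
import hmac
import secrets

TAG_LEN = 32


def _tag(key: bytes, context: bytes, ciphertext: bytes) -> bytes:
    # Length-prefix the context so context and ciphertext cannot be confused.
    message = len(context).to_bytes(4, "big") + context + ciphertext
    return hmac.new(key, message, hashlib.sha256).digest()


def bind(key: bytes, context: bytes, ciphertext: bytes) -> bytes:
    return ciphertext + _tag(key, context, ciphertext)


def open_bound(key: bytes, context: bytes, blob: bytes) -> bytes:
    # Refuse blobs that were created for a different context.
    if len(blob) < TAG_LEN:
        raise PermissionError("blob too short")
    ciphertext, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    if not hmac.compare_digest(tag, _tag(key, context, ciphertext)):
        raise PermissionError("blob does not belong to this context")
    return ciphertext


key = secrets.token_bytes(32)
origin = b"tenant:42|table:customers|column:ssn"
blob = bind(key, origin, b"<ciphertext bytes>")

# A different component (or tenant) replays the blob in its own context.
other = b"tenant:7|table:customers|column:ssn"
```

Opening the blob with `origin` succeeds, while opening it with `other` raises an error, so moving a blob to another part of the system no longer turns that part into a decryption service.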

Application-level encryption vs column-level encryption

It’s important not to confuse these two encryption types. Column-level encryption is encryption that takes place inside the database. Application-level encryption means that code in the application is responsible for encrypting the data.

Should we prefer one over the other? Definitely.

There are major advantages to using application-level encryption:

- Application-level encryption is database implementation agnostic. In organizations with many R&D teams, different tech stacks, or many data stores, you can standardize on the same encryption method and implementation and manage security for everything in one tool.

- Attackers accessing the database directly can only read ciphertext (i.e., protected) data and cannot use it as they don’t have the keys to decrypt it.

- In column-level encryption, the database engine automatically decrypts the ciphertext data for whoever has the access permissions to the table. This process means the protection method isn't very effective, as we mentioned earlier when discussing data encryption at rest.

- If you use application-level encryption for storing data in the database, you don’t necessarily need other encryption methods running underneath, such as transparent database encryption (TDE), column level encryption, or file system encryption, as they don’t add much (well, again, depends on threat modeling).

Searching encrypted data

Encrypted data is hard to search, particularly when you implement application-level encryption and can no longer rely on the database’s native search. This challenge means you must develop an encrypted-data search capability.

Technically, you don’t search encrypted data. You first index the data and then store it encrypted, but then somehow provide a way to find the relevant data quickly.

Let’s start with the simple feature.

Exact Matching

Suppose you encrypt the customer first name data and you want to search for customers with the first name ‘john’. How can you do it fast and safely? You could:

- Decrypt all names and then search over them. This is likely to perform poorly and risks exposing the data, whether you hold all the decrypted names in memory at once or go through them one by one.

- Encrypt the name you’re looking for and then have the database engine look it up for you as if it were a normal string literal. But this means you’re using deterministic encryption, which is a bad idea because identical plaintexts always produce identical ciphertexts.

Given the requirement for a safe and strong encryption scheme, how is more secure searching possible? Comparing two strings isn’t going to work anymore, as each name in the table has a different ciphertext (even if it’s the same name; that’s the point of non-deterministic ciphers).

Therefore, the right way to approach it is:

- Using AES-256-GCM, which ensures the data can’t be tampered with undetected and that encrypting the same data twice doesn’t yield the same ciphertext (that is, non-deterministic encryption). Also, use different keys for different columns or tables.

- Employing blind indexing, which means securely hashing the plaintext data before it is encrypted and storing the hash in a separate column.

This extra blind index column does the magic for exact matches. So, before looking up an input string in the table, calculate its secure hash, and then look it up with a simple query over this index column. You use a secure hash not to avoid collisions but to ensure that anybody who accesses this column will have a hard time leaking information from it and understanding what data lies behind it.
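A blind index can be sketched as follows. The example assumes a keyed hash (HMAC-SHA256) with a dedicated index key, which is a common way to build the "secure hash" mentioned above; the column names, the normalization step, and the in-memory rows are hypothetical stand-ins for a real table.

```python
import hashlib
import hmac
import secrets


def blind_index(index_key: bytes, value: str) -> str:
    # Normalize first so "John" and " john " map to the same index value.
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(index_key, normalized, hashlib.sha256).hexdigest()


# Assumption: a dedicated index key, separate from the encryption key.
index_key = secrets.token_bytes(32)

# Each row stores the ciphertext plus the blind index of the plaintext.
rows = [
    {"id": 1, "first_name_enc": b"<ciphertext 1>", "first_name_bidx": blind_index(index_key, "John")},
    {"id": 2, "first_name_enc": b"<ciphertext 2>", "first_name_bidx": blind_index(index_key, "Mary")},
    {"id": 3, "first_name_enc": b"<ciphertext 3>", "first_name_bidx": blind_index(index_key, "john")},
]

# Equivalent to: SELECT id FROM customers WHERE first_name_bidx = :needle
needle = blind_index(index_key, "john")
matches = [row["id"] for row in rows if row["first_name_bidx"] == needle]
print(matches)
```

Without the index key, an attacker who reads the column cannot simply hash a dictionary of common names and compare. Equal names still produce equal index values, so the column reveals which rows share a value; that trade-off is what makes the exact-match lookup possible.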

Substring Matching

Searching for a substring in encrypted data is far harder. Building a robust and scalable encryption system that allows searching requires a lot of magic. The best approach is to use membership querying, a well-researched topic in academia.

For example, a Bloom filter is a good approach to this problem. And, like with the blind index, it makes it harder to leak information.

This time, instead of having a single index value, as for exact matching, you do many calculations. First, when inserting data strings into the table, calculate the index (Bloom filter) over the substrings of each string and store it in a wider index field. Then, when you want to find a substring, you do a similar calculation and look it up using this index.

The issue with this approach is that you sometimes have to balance the index column to maintain efficiency, which complicates the implementation. Naturally, this index uses more storage and CPU.
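The idea can be sketched with a small keyed Bloom filter over 3-character substrings (trigrams). The filter size, the number of hash functions, the trigram choice, and the function names are all assumptions made for illustration, not tuned values.

```python
import hashlib
import hmac
import secrets

M_BITS = 256   # filter width in bits (assumption)
K_HASHES = 3   # bit positions set per trigram (assumption)
NGRAM = 3


def ngrams(text: str, n: int = NGRAM) -> set:
    t = text.lower()
    return {t[i: i + n] for i in range(len(t) - n + 1)}


def bit_positions(key: bytes, gram: str):
    # Keyed hashes, so the filter is useless without the index key.
    for i in range(K_HASHES):
        digest = hmac.new(key, bytes([i]) + gram.encode("utf-8"), hashlib.sha256).digest()
        yield int.from_bytes(digest[:4], "big") % M_BITS


def build_filter(key: bytes, text: str) -> int:
    bits = 0
    for gram in ngrams(text):
        for pos in bit_positions(key, gram):
            bits |= 1 << pos
    return bits


def may_contain(key: bytes, bloom: int, query: str) -> bool:
    grams = ngrams(query)
    if not grams:
        raise ValueError("query is shorter than the n-gram size")
    return all((bloom >> pos) & 1 for g in grams for pos in bit_positions(key, g))


index_key = secrets.token_bytes(32)
bloom = build_filter(index_key, "Johnathan")  # stored next to the ciphertext
print(may_contain(index_key, bloom, "nath"))
```

A Bloom filter never misses a real match but can report false positives, so candidate rows still need to be decrypted and checked. The width of the filter is the storage versus accuracy balance mentioned above.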

Performance drawbacks

Encrypting data impacts speed and memory, but by how much? You have a few options to choose from, though practically, we’d recommend sticking to the standard. When you store data, encryption can significantly inflate your storage. You also have to decide at what granularity to encrypt (file, cluster, sector, column, table, etc.). For example, when using application-level encryption, each encrypted blob contains padding and metadata to aid anti-tampering, which bloats every stored value.
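A rough way to estimate the storage inflation is to add up the per-value overhead. The sketch below assumes an AES-GCM blob laid out as nonce, ciphertext, and tag, with a 12-byte nonce and a 16-byte tag, optionally stored as Base64 text. Your library's blob format may differ, so treat the numbers as an illustration.

```python
import base64

NONCE_LEN = 12  # bytes; the nonce size commonly used with AES-GCM
TAG_LEN = 16    # bytes; a full-length GCM authentication tag


def stored_size(plaintext_len: int, as_base64: bool = True) -> int:
    # GCM ciphertext is as long as the plaintext; nonce and tag are extra.
    raw = NONCE_LEN + plaintext_len + TAG_LEN
    if not as_base64:
        return raw
    return len(base64.b64encode(bytes(raw)))


for n in (4, 16, 1000):
    print(n, stored_size(n, as_base64=False), stored_size(n))
```

Under these assumptions, a 4-byte first name grows to 32 bytes raw or 44 characters as Base64, while a 1000-byte document grows to 1372 characters. The fixed overhead hurts most when you encrypt many small values, such as individual columns.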

It's crucial to ensure that the chosen encryption doesn't substantially impact system performance. Either way, encryption is a must and is worth this mostly negligible performance penalty.

Choose appropriately between:

- Symmetric encryption, which is notably fast and adds little overhead when encrypting stored data.

- Asymmetric methods, such as RSA, EC, and Diffie-Hellman, which are computationally intensive, slower, and used mainly for key exchange in network protocols.

- Hybrid schemes, which offer a compromise by using asymmetric encryption for key exchange and then transitioning to symmetric encryption, as in TLS.

- Deliberately slow and resource-intensive cryptography, which is sometimes the right choice, for example, when protecting cryptocurrencies such as Bitcoin.

Security alongside encryption

Cryptography and encryption are tools to make systems more secure and help preserve privacy and confidentiality. Remember, encryption is a very strong access control over data. But encryption by itself isn’t enough. A few more things to consider when building a secure cloud application:

- Least privilege: Only provide services access to the resources they need. This technique helps limit any damage should the service be compromised. For example, the services in the backend shouldn’t necessarily be able to read all the data from all the systems or use all the keys in the KMS. Each service should have its own permissions, its own user to access databases and tables, its own set of keys, and its own user to access minimum data in an S3 bucket, etc.

- Data masking: Where all you need to know is that data exists, but the full value isn't essential, use data masking. Masking should include data such as credit cards, but don't forget national identification numbers, social security numbers, etc.

- Data minimization: Better than protecting data is not having the data to begin with. Your data scientists may love collecting heaps of data, but things have changed since GDPR started in 2018. So, collect as little data as possible and make sure your users know this.

- Log auditing: Record as many events as possible. They could prove helpful for understanding what’s gone on when conducting post-mortem analysis.

- Monitoring: Actively watch what’s happening inside your system. If you see anomalies or deviations from the baseline in traffic, data volume, or accesses to some machine, look into it.
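The data masking item in the list above can be sketched in a few lines. The function name and the choice to keep the last four digits visible are assumptions; pick whatever your use case and regulations allow.

```python
def mask_card_number(pan: str, visible: int = 4) -> str:
    # Keep only the digits, then hide all but the last `visible` of them.
    digits = [c for c in pan if c.isdigit()]
    if len(digits) <= visible:
        return "*" * len(digits)
    return "*" * (len(digits) - visible) + "".join(digits[-visible:])


print(mask_card_number("4111 1111 1111 1111"))
```

Services that only need to show a user which card is on file can receive the masked value and never touch the full number.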

Conclusion

Building robust application-level encryption is challenging. We just glimpsed the number of possible mistakes and the expertise it takes to build a robust encryption library that holds up against attacks. Dealing with broken implementations or weak encryption in application backends is even lesser-known territory, because nobody talks much about how you should handle keys and keep them safe to avoid cryptographic failures.

There are many performance implications and functional limitations to developing encryption yourself. While it’s not most companies’ core competency, many do it repeatedly. However, as we discussed at the start of this post, it's fraught with dangers they are unaware of. They might think their data is protected, but in practice, it’s not, and the weak encryption might lead to data breaches.

If you are looking for a solution that can provide out-of-the-box features to address the challenges discussed in the article, Piiano Vault might be your answer.

You can create an account for free and start using our APIs to see how you could skip the effort of figuring out implementation details, key safety, etc. Not only that, Piiano Vault delivers much more, including easy data management and privacy features so you can finally relax, knowing your sensitive data is protected in the rapidly changing threat environment.

It all begins with the cloud, where applications are accessible to everyone. Therefore, a user or an attacker makes no difference per se. Technically, encrypting all data at rest and in transit might seem like a comprehensive approach, but these methods are not enough anymore. For cloud hosted applications, data-at-rest encryption does not provide the coverage one might expect.

Senior Product Owner